SAMIR MARDOLKER elaborates on how AI risks freezing history in place – by keeping today’s voices louder than tomorrow’s

The real danger of AI isn’t rogue machines – it is powerful people refusing to log off. Unlike in past centuries, when big ideas came and went, today’s leaders seem more obsessed with control than with progress. If AI becomes their tool, history might stop moving forward and start running on a loop. The answer? Not resisting AI, but thinking differently about it. As this write-up explores, divergent thinking, even the absurd kind like AI Gandhi, helps us see risks before they trap us. Recognizing these risks allows us to set the right guardrails, ensuring AI is harnessed for progress, not just preservation. Because if we don’t, the loudest voices of today might just become the only voices of tomorrow.

Has this century earned the right to be preserved?

Let’s start with a slightly uncomfortable question: Has the 21st century produced any thinking, decision-making, or action worth preserving for the ages? Is there a GLOBAL leader, a philosophy, a moment in time we’d want AI to study, optimize, and carry forward into eternity?

Tough one, isn’t it? If the answer is no (and it’s looking like a no), then congratulations – humanity is currently running on fumes! Sure, we have had breakthroughs in technology, but what about ideas? Instead of bold, transformative thinking, we have leaders more interested in controlling narratives than creating new ones.

And that brings us to the real problem: AI isn’t the enemy. The enemy is what certain humans will do with AI.

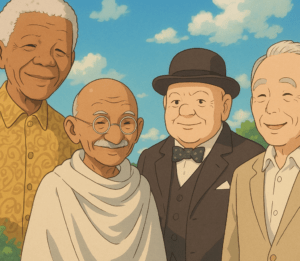

The 1900s had big ideas. The 2000s? Not so much. The 20th century gave us thinkers and doers – people who didn’t just play the game; they rewrote the rules. The Mahatma Gandhis, the Winston Churchills, the Lee Kuan Yews, the Mandelas. Their influence was so profound that it still lingers today, but, crucially, it is fading, as it should. That’s how progress works: new ideas emerge, and the past gradually steps aside.

What if we just… brought them back?

The absence of great, world-shaping ideas today leaves a vacuum. And nature, especially human nature, abhors a vacuum. So, what if instead of waiting for the next great thinker, we just recreated the ones we already had?

Imagine an AI-powered Gandhi 2.0 to negotiate peace. A Lee Kuan Yew chatbot advising Singaporeans. A Churchill app delivering speeches, rallying nations in crisis.

Would AI versions of these leaders actually be better than the originals? They wouldn’t get tired, wouldn’t hold grudges, wouldn’t have to deal with scandals, health issues, or the occasional questionable speech.

A preposterous idea? Maybe. But it’s also the kind of divergent thinking that helps us understand where AI could go right – and where it could go terribly, terribly wrong.

The real danger: who actually uses this technology?

The unsettling truth is that it won’t be the Gandhis who use AI to extend their influence. It’ll be the power-hungry cult leaders, politicians, and billionaires – the ones who refuse to let go.

And today’s world is perfectly structured to make their influence last forever. The more polarized our thinking gets, the easier it is to feed into an AI model. Binary thinking – us vs. them, left vs. right, agree or cancel – is exactly what AI understands best. Combine this with rapid advancements in processing power, and we get:

• Leaders who never truly leave because their AI versions keep making decisions.

• Political parties running AI clones of past leaders indefinitely.

• Business founders whose philosophies dictate strategy long after their companies should have evolved.

We aren’t progressing forward. We are getting stuck in a loop. AI won’t take over. But some humans will.

Let’s be clear: AI is not the risk. The risk is how those in power will shape it. The people most likely to use AI in this way are the ones already in control. They have the data. They have the technology. And they have every reason to pursue self-preservation, not progress.

We feared AI would replace humans. The real concern is that it won’t replace them at all. Instead, it will lock us into a world where today’s most powerful voices become the only voices of tomorrow.

What’s the way forward?

Powerful technologies will keep emerging: AI today, something else tomorrow. The real question is whether we have the right thinking in place to guide them.

The only way to ensure that AI serves progress instead of preservation is by encouraging divergent thinking, the kind that explores absurd ideas (yes, like AI Gandhi) to surface new possibilities and risks.

Because when we take time to explore, we can set the right guardrails. Had we done this sooner, we wouldn’t already be struggling with AI models that aren’t purely fact-driven but are shaped by governments and those in power.

And if that sounds like something in the distant future, think again. Just look at the DeepSeek vs. ChatGPT debate. The models are powerful. The influence behind them? Even more so. And with great power comes great responsibility, especially in how these models are trained.